aptX-HD Principles and Explanation

Sound and Frequency

To understand aptX-HD and how this audio codec affects the wireless streaming of audio, we must first look at how the human ear interprets sound. This is important because the principles of the ear's function are directly related to how audio codecs work.

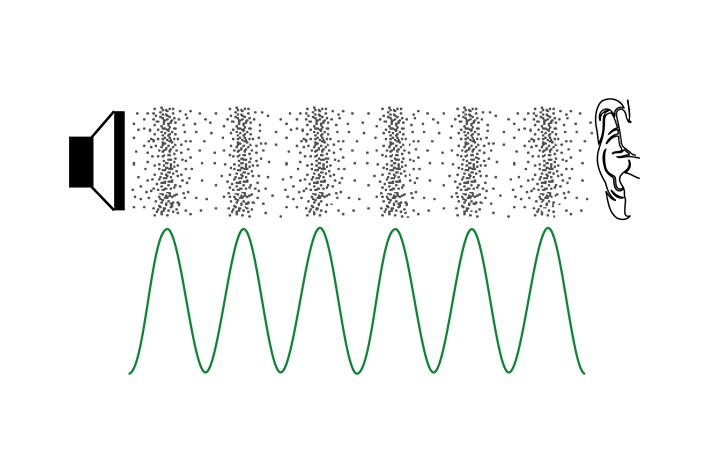

In physics, sound is a vibration. This vibration takes the form of an audible pressure wave that can be transmitted through a medium such as a gas, liquid or solid. Sound travels as a longitudinal wave, which means that the particles in the medium move back and forth along the direction in which the wave travels. This movement causes local increases in pressure, known as compression, and local decreases in pressure, known as rarefaction (see figure A).

Figure A. Sound wave demonstrating compression and rarefaction.

Frequency in humans

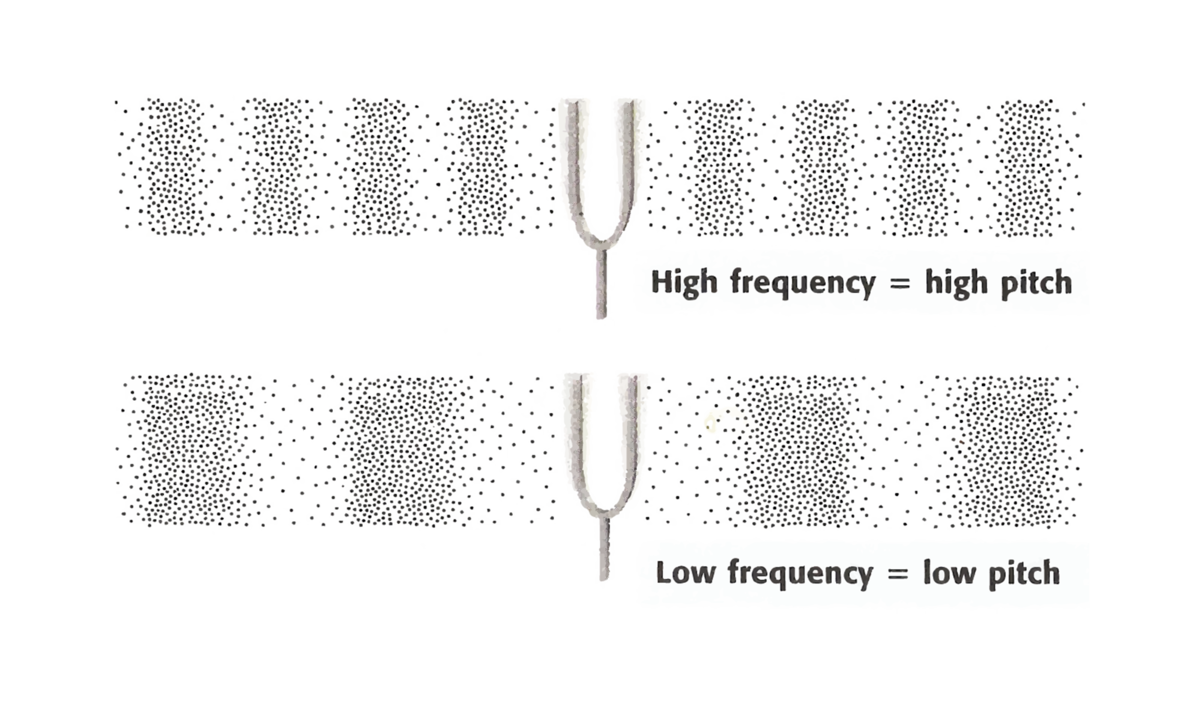

Another important property of sound for humans is its frequency. An audio frequency is a periodic vibration whose frequency is perceivable by the average human. Frequency is measured in hertz (Hz), the SI unit of frequency. The frequency of a sound wave determines its pitch. The hearing range of young people is about 20 Hz to 20,000 Hz (20 kHz) (see figure B).

Figure B. Particle displacement for high and low frequency.

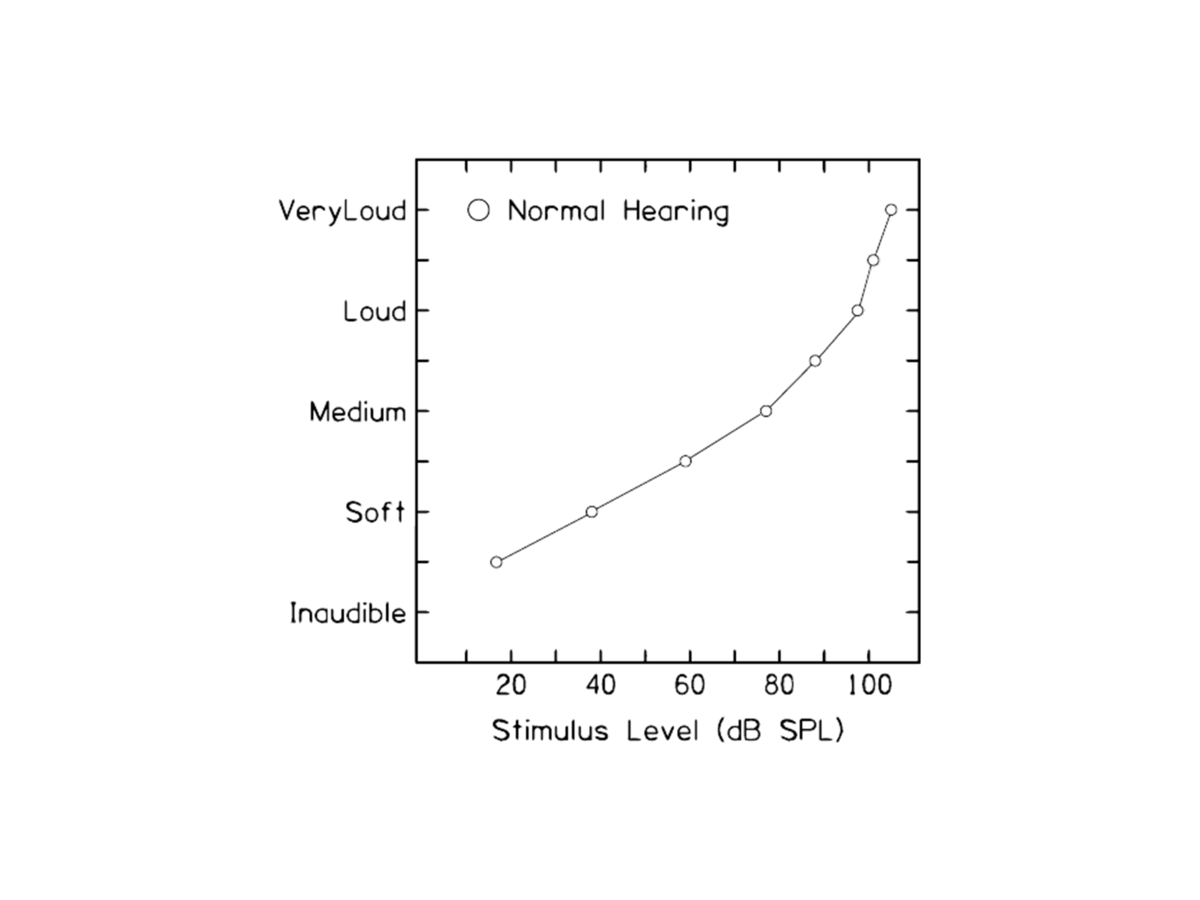

Human Dynamic Range

The human ear is able to perceive many of the sounds produced in nature, but by no means all of them. Any frequency below 20 Hz, such as a heartbeat at 1 or 2 Hz, is known as infrasound. These sounds are too low for humans to hear, although some animals, such as elephants, can perceive them. On the other hand, any frequency above the human hearing range is known as ultrasound. Many animals, such as bats, whales and dolphins, use ultrasound for navigation.

Figure C. Dynamic range of human hearing.

The Human Ear

In humans, sound is the reception of these waves at the ear and their perception by the brain. The range between the quietest and loudest sounds is known as the dynamic range. The dynamic range of human hearing is roughly 140 dB, from the quietest perceivable sounds, known as the threshold of hearing (around -9 dB SPL at 3 kHz), to the loudest sounds, known as the threshold of pain (from 120 dB to 140 dB SPL). This wide dynamic range, however, cannot be perceived all at once. The human ear is divided into many parts that each have a specific task. The tensor tympani, the stapedius muscle and the outer hair cells all act as mechanical dynamic range compressors that adjust the sensitivity of the ear to different ambient levels. Essentially, these parts of the ear reduce the level of loud sounds reaching the inner ear so that hearing adapts to the surrounding sound level.

The dynamic range of music normally perceived in a concert hall will rarely exceed 80 dB, and human speech is usually perceived over a range of about 40 dB.
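
The decibel figures above are logarithmic ratios of sound pressure. As a rough illustration, the sketch below converts between decibels and pressure ratios. The 20 micropascal reference for 0 dB SPL is the conventional value and is an assumption here, since the text does not state it; the function names are made up for this example.

```python
import math

# Assumed reference pressure for 0 dB SPL (20 micropascals); not stated in the text.
P_REF = 20e-6


def pressure_to_db_spl(pressure_pa: float) -> float:
    """Convert a sound pressure in pascals to a level in dB SPL."""
    return 20.0 * math.log10(pressure_pa / P_REF)


def db_to_pressure_ratio(db: float) -> float:
    """Convert a level difference in dB to the equivalent pressure ratio."""
    return 10.0 ** (db / 20.0)


# Dynamic ranges quoted in the text, expressed as pressure ratios.
print(round(db_to_pressure_ratio(140)))  # 10000000 (human hearing)
print(round(db_to_pressure_ratio(80)))   # 10000 (concert hall music)
print(round(db_to_pressure_ratio(40)))   # 100 (speech)
```

Seen this way, the 140 dB range of human hearing corresponds to a ten-million-to-one ratio between the loudest and quietest sound pressures.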

Figure D. Anatomy of human ear.

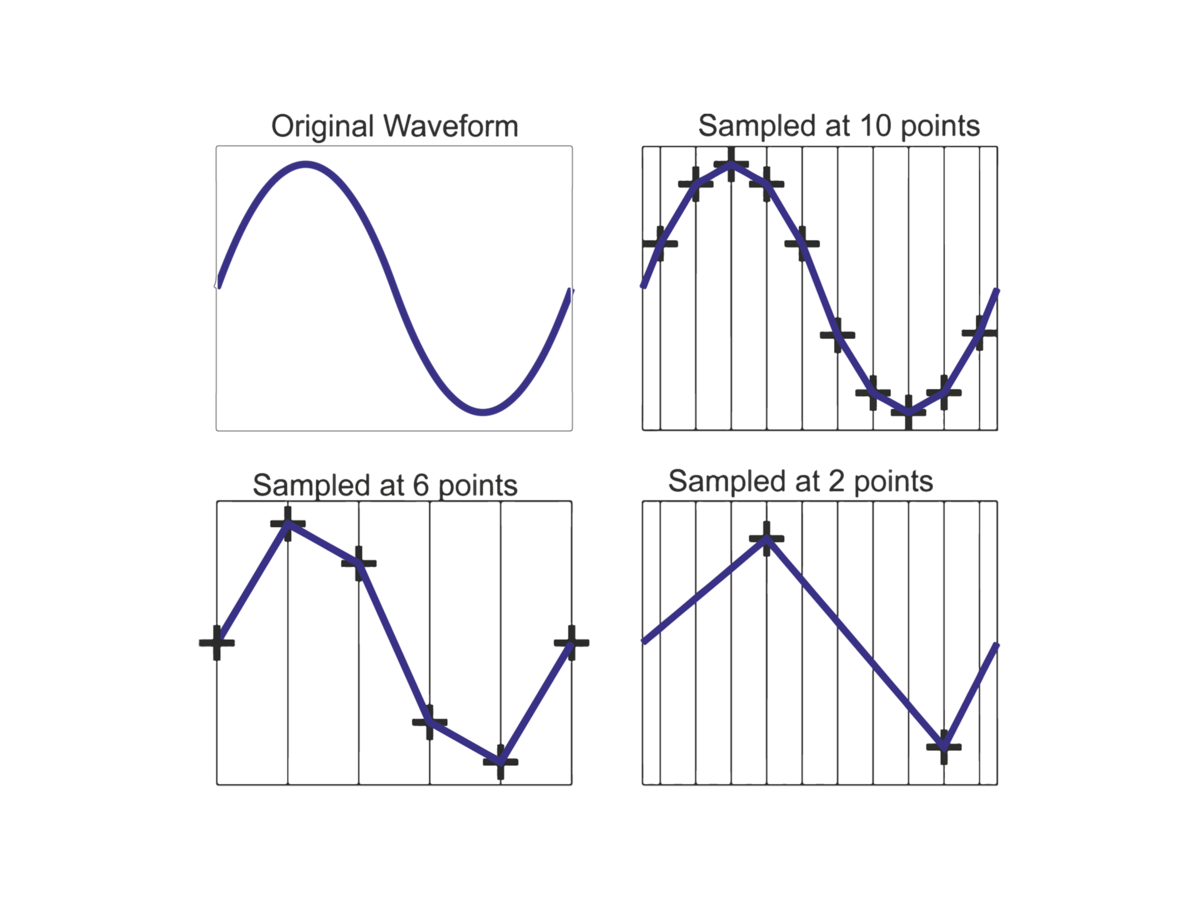

Sample rates

To convert analogue audio signals (music) into the digital domain (CD, MP3), a rapid series of short ‘snapshots’ is captured. This is known as sampling. Each sample records the amplitude of the original analogue signal at one instant, and together the samples capture the dynamic range and frequency content of the signal. The sample rate is therefore the number of ‘snapshots’ recorded every second (see figure E). Sample rates vary depending on the purpose of the product; for CDs, and typically for MP3s, the sample rate is 44.1 kHz. This means that 44,100 ‘snapshots’ are taken every second. This sample rate is used because humans only hear up to about 20,000 Hz, and the underpinning sampling theory (the Nyquist-Shannon sampling theorem) states that the sample rate must be at least twice the highest frequency in the signal being sampled.

Figure E. Basic example of different sampling rates.

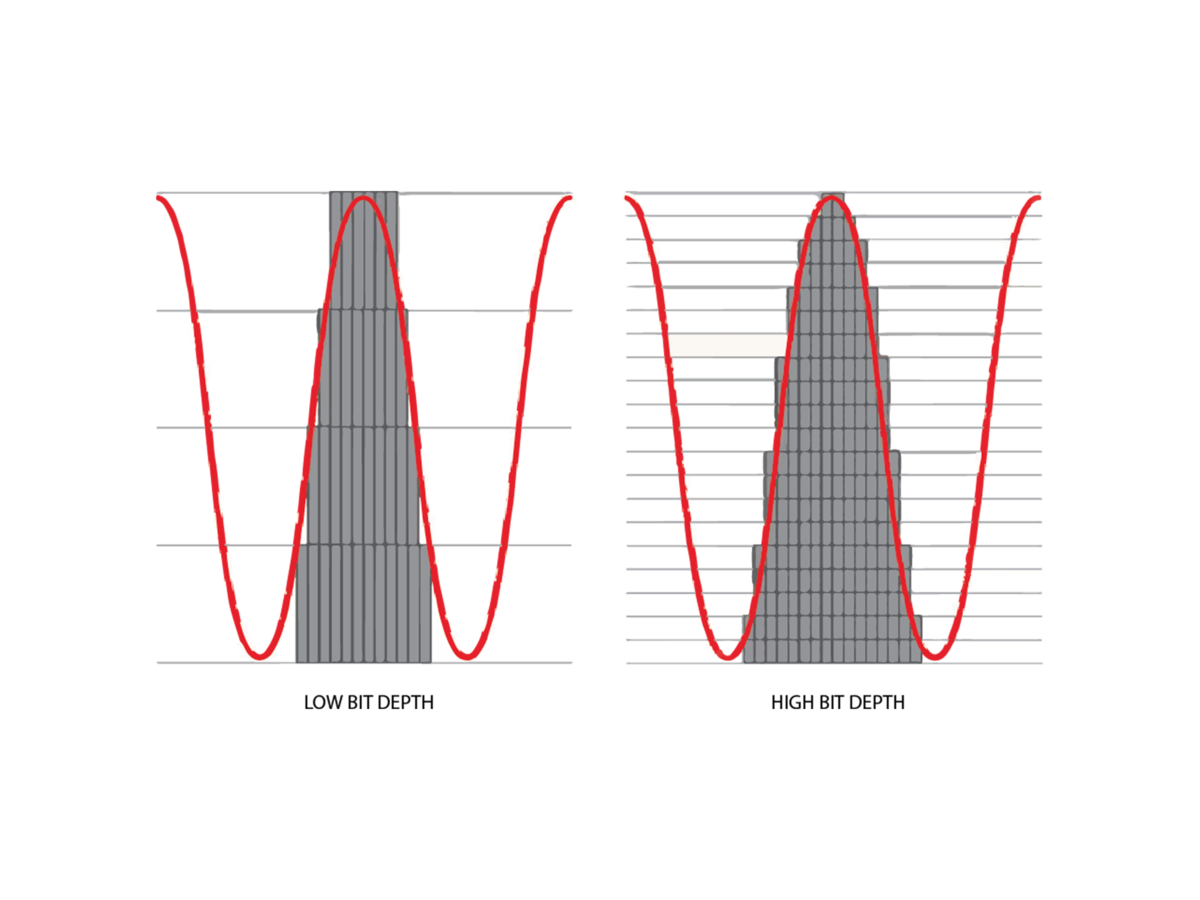
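
The relationship between sample rate and the highest representable frequency can be sketched in a few lines. The example below is a minimal illustration, not part of any codec: it samples sine waves and shows that a tone above half the sample rate produces the same samples (apart from sign) as a lower tone, an effect known as aliasing. The function names and the 30 kHz test tone are assumptions chosen for the example.

```python
import math


def sample_sine(freq_hz: float, sample_rate_hz: float, n_samples: int) -> list:
    """Take n_samples 'snapshots' of a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]


def nyquist_frequency(sample_rate_hz: float) -> float:
    """Highest frequency that a given sample rate can represent."""
    return sample_rate_hz / 2


def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency of a tone after sampling at sample_rate_hz."""
    folded = freq_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


CD_RATE = 44_100
print(nyquist_frequency(CD_RATE))        # 22050.0
print(alias_frequency(20_000, CD_RATE))  # 20000 (below the limit, unchanged)
print(alias_frequency(30_000, CD_RATE))  # 14100 (above the limit, folded down)

# A 30 kHz tone yields the same samples as a 14.1 kHz tone with inverted sign.
high = sample_sine(30_000, CD_RATE, 100)
low = sample_sine(14_100, CD_RATE, 100)
print(all(abs(h + l) < 1e-9 for h, l in zip(high, low)))  # True
```

With a 44.1 kHz sample rate, the 20 kHz upper limit of human hearing sits just under the 22.05 kHz limit, which is why no audible tone is folded down.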

Word length (bit depths) and Bit Rates

The word length (commonly, if loosely, called bit depth) determines the number of increments used to represent the amplitude of a signal. An n-bit system provides 2^n increments, so a 3-bit system would provide 8 increments and an 8-bit system 256. Every sample is assigned to the nearest increment, as shown in the graphic (see figure F). This is known as quantisation. The greater the word length, the greater the dynamic range, as each sample has more increments available to represent its exact amplitude at that point in time.
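
Quantisation can be illustrated with a short sketch. The code below assumes samples in the range -1.0 to 1.0 and evenly spaced increments, and rounds each sample to the nearest one. The function names and the test value 0.3 are hypothetical, and real converters differ in detail.

```python
def quantisation_levels(bits: int) -> int:
    """Number of amplitude increments available for a given word length."""
    return 2 ** bits


def quantise(sample: float, bits: int) -> float:
    """Round a sample in [-1.0, 1.0] to the nearest of 2**bits evenly spaced levels."""
    levels = quantisation_levels(bits)
    step = 2.0 / (levels - 1)              # spacing between adjacent levels
    index = round((sample + 1.0) / step)   # nearest level, counted from the bottom
    index = max(0, min(levels - 1, index))  # clamp out-of-range input
    return -1.0 + index * step


print(quantisation_levels(3))  # 8
print(quantisation_levels(8))  # 256

# The same sample is represented more accurately with a longer word length.
coarse_error = abs(quantise(0.3, 3) - 0.3)
fine_error = abs(quantise(0.3, 8) - 0.3)
print(fine_error < coarse_error)  # True
```

The difference between the original sample and its quantised value is the quantisation error, which shrinks as the word length grows.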

The bit rate, which is expressed in thousands of bits per second (kbps), is a measure of the amount of data required to represent one second of audio. The higher the bit rate, the more data per second of music. A higher bit rate generally gives better quality audio along with a larger file. An example calculation of the bit rate of CD-quality stereo audio is given below.

16 (bits) x 44,100 (samples per second) x 2 (stereo channels) = 1,411,200 bits per second = 1411.2 kbps
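
The same calculation can be written as a small helper, which also makes it easy to estimate file sizes. This is a sketch for uncompressed (PCM) audio only; the function names and the three-minute track length are illustrative assumptions.

```python
def pcm_bit_rate_kbps(word_length_bits: int, sample_rate_hz: int, channels: int) -> float:
    """Bit rate of uncompressed audio in thousands of bits per second."""
    return word_length_bits * sample_rate_hz * channels / 1000


def size_megabytes(bit_rate_kbps: float, seconds: float) -> float:
    """Approximate size in megabytes (10**6 bytes) of audio at a given bit rate."""
    return bit_rate_kbps * 1000 * seconds / 8 / 1_000_000


cd_rate = pcm_bit_rate_kbps(16, 44_100, 2)
print(cd_rate)  # 1411.2

# Approximate size of a three-minute track at CD quality (about 31.75 MB).
print(round(size_megabytes(cd_rate, 180), 2))
```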

Figure F. Examples of low and high bit-depth systems. Low bit depth is equal to four. High bit depth is equal to twenty.

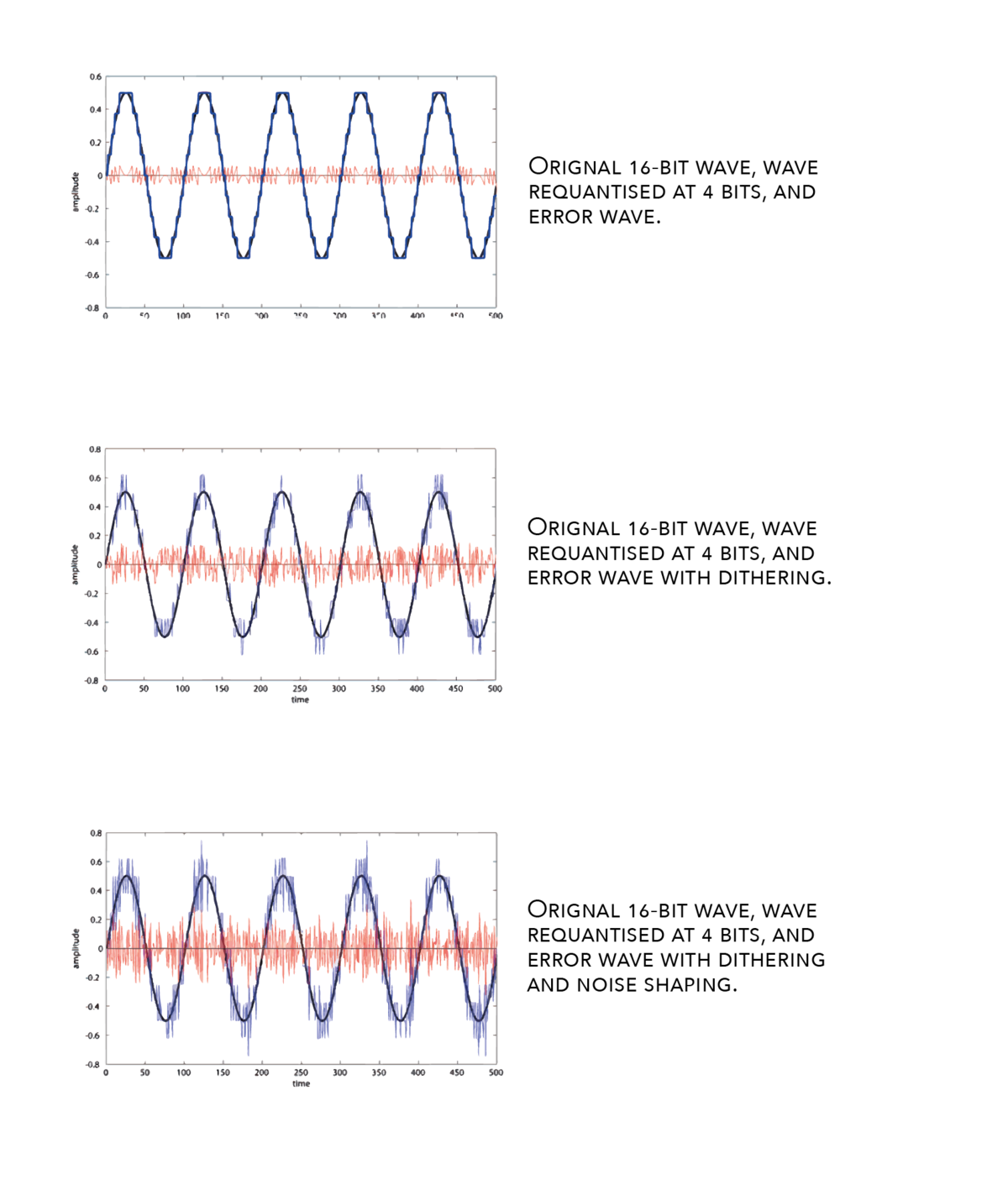

Digital Principles

For the 16-bit CD (Compact Disc), the theoretical dynamic range is about 96 dB. However, the perceived dynamic range of 16-bit audio can be 120 dB or more because of the processes applied during coding, as shown in figure G. The main process adds dither and noise shaping to the signal. When only dither is applied, the added noise is spread over all frequencies, but when noise shaping is combined with dither, less noise is present at low frequencies and more at high frequencies. The idea is that the noise is moved to a frequency range where human hearing is less sensitive.
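
The effect of dither can be shown with a small experiment. The sketch below assumes a quantiser that rounds to whole increments and uses triangular (TPDF) dither, a common choice that the text does not specify. It does not attempt noise shaping. A constant signal of 0.3 of one increment disappears entirely without dither, but survives on average once dither is added before rounding.

```python
import random


def tpdf_dither(rng: random.Random) -> float:
    """Triangular dither spanning +/- 1 increment (sum of two uniform values)."""
    return rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)


def average_output(level: float, n: int, dithered: bool, seed: int = 0) -> float:
    """Quantise a constant input n times and return the mean quantised value."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    total = 0
    for _ in range(n):
        noise = tpdf_dither(rng) if dithered else 0.0
        total += round(level + noise)  # quantise to the nearest whole increment
    return total / n


# Without dither, a signal smaller than half an increment is always rounded to zero.
print(average_output(0.3, 20_000, dithered=False))  # 0.0

# With dither, the average of the quantised values stays close to 0.3.
print(abs(average_output(0.3, 20_000, dithered=True) - 0.3) < 0.05)  # True
```

The price is a small amount of added noise, which is why noise shaping is then used to move that noise to frequencies where it is harder to hear.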

A 20-bit digital audio system is potentially capable of a 120 dB dynamic range, and 24-bit digital audio calculates to 144 dB. This suggests that either a 20-bit or a 24-bit system can cover the same lower and upper limits of dynamic range as human hearing. Most digital audio workstations use a 32-bit floating-point system, which allows for an even higher dynamic range, so loss of dynamic range is no longer a concern in digital audio processing.

This final point is an important one, because when analogue signals (music) were first recorded to digital devices (PCs, DAWs), the low-bit systems often could not represent the full dynamic range. As a result, the final digital version of the recording would have less of a range between the quietest and loudest sounds, which could affect the listening quality for the final audience.
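
The dynamic range figures quoted for 16-, 20- and 24-bit audio follow from the number of increments each word length provides. As a minimal sketch, using the usual approximation that the range is the ratio between the largest value and one increment (roughly 6 dB per bit):

```python
import math


def theoretical_dynamic_range_db(bits: int) -> float:
    """Approximate dynamic range of an integer word length, in decibels."""
    return 20 * math.log10(2 ** bits)


for bits in (16, 20, 24):
    print(bits, f"{theoretical_dynamic_range_db(bits):.1f} dB")
# 16 96.3 dB
# 20 120.4 dB
# 24 144.5 dB
```

This simple formula ignores dither and noise shaping, which is why the perceived range of 16-bit audio can exceed the 96 dB it predicts.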

Figure G. Comparison of requantization without dithering, with dithering, and with dithering and noise shaping.

Lossy/Lossless Compression

To store a large number of digital audio files on low-power mobile devices (mobiles, tablets, laptops), the original audio files must be reduced in size. Otherwise, only a small number of songs would fit on each device.

There are two ways to achieve this and they are known as lossless and lossy compression.

Lossless compression (FLAC, ALAC) allows all of the original data to be recovered when the file is uncompressed again, whilst still significantly reducing the file size.

Lossy compression (MP3, AAC) works differently: it discards the “unnecessary” pieces of information in the original file to make it even smaller when compressed. It applies techniques based upon how our brain interprets sound, removing harmonics and other frequency content that would not be perceived. Lossy techniques can reduce the original file size to a tenth whilst maintaining an almost identical-sounding file.
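
The defining difference between the two approaches is whether the original data can be recovered exactly. The sketch below demonstrates this with stand-ins rather than real audio codecs: Python's general-purpose `zlib` plays the role of a lossless compressor, and discarding the lowest bits of each byte is a crude substitute for the perceptual techniques that MP3 and AAC actually use. The test data and function names are made up for the example.

```python
import zlib


def lossless_round_trip(data: bytes) -> bytes:
    """Compress and decompress with zlib; the output matches the input exactly."""
    return zlib.decompress(zlib.compress(data))


def lossy_round_trip(data: bytes, dropped_bits: int = 4) -> bytes:
    """Crude lossy stand-in: zero the lowest bits of every byte (irreversible)."""
    mask = 0xFF & ~((1 << dropped_bits) - 1)
    return bytes(b & mask for b in data)


# Made-up test data standing in for 8-bit audio samples.
original = bytes((i * 7) % 256 for i in range(1024))

print(lossless_round_trip(original) == original)  # True: nothing was lost
print(lossy_round_trip(original) == original)     # False: detail was discarded
```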

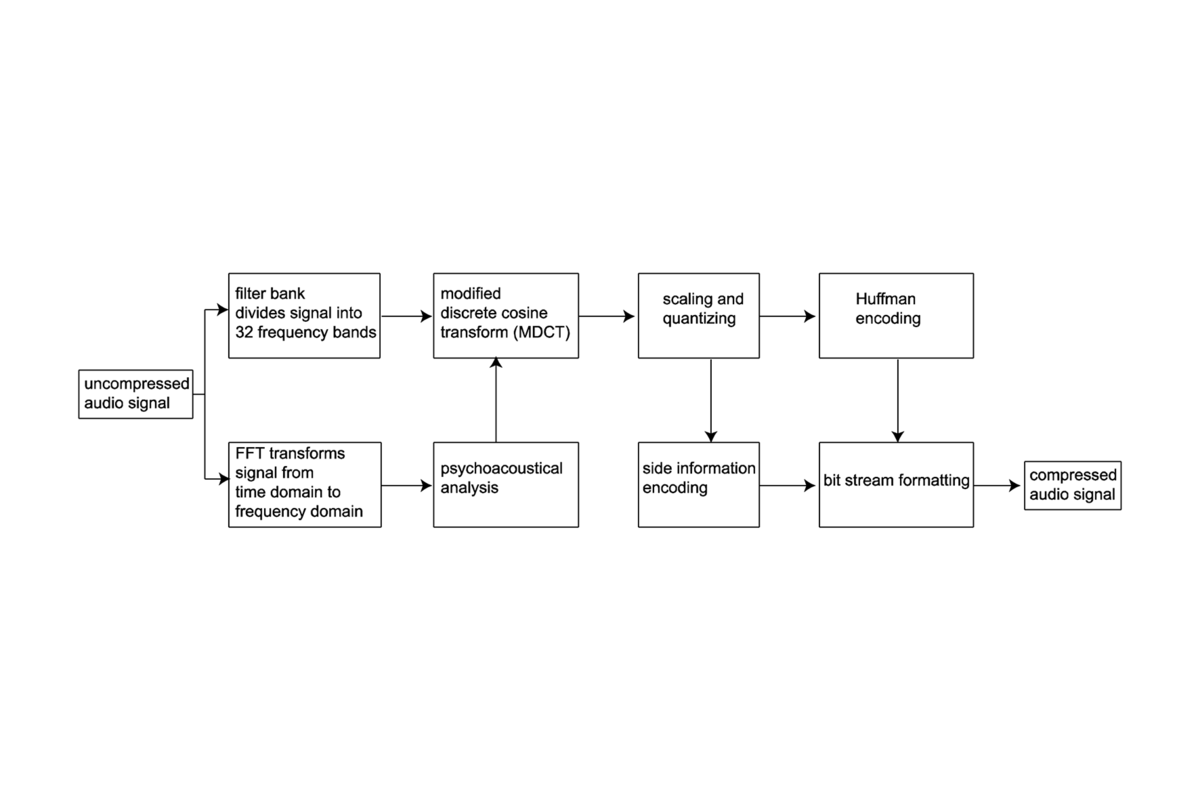

Figure H. Encoding/decoding process for MP3 audio.

aptX-HD explained

Explained below are some of the main features of aptX-HD and how it differs from other lossless codecs.

aptX-HD (sometimes described alongside aptX Lossless, a related member of the aptX family) has a bit rate of 576 kbps. This allows for high-definition audio at sampling rates up to 48 kHz and word lengths (bit depths) up to 24 bits, providing sufficient detail to represent the analogue signal in digital form. Although the association with the name ‘Lossless’ suggests otherwise, the codec is technically still lossy. This is because it uses ‘near lossless’ coding for parts of the audio where it is impossible to apply lossless coding. ‘Near lossless’ coding is applied in these situations because there is only a limited amount of capacity available to transmit digital signals. This capacity is known as bandwidth, and because it is finite, applying fully lossless coding all the time would require more bandwidth than is available. ‘Near lossless’ coding maintains high-definition qualities such as a dynamic range of at least 120 dB and still represents audio frequencies up to 20 kHz.
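
To see why some loss is unavoidable at this bit rate, it helps to compare 576 kbps with the bit rate of the uncompressed source. The sketch below assumes a two-channel (stereo) stream, which the text does not state explicitly, and uses the 24-bit, 48 kHz figures quoted above.

```python
def pcm_bit_rate_kbps(word_length_bits: int, sample_rate_hz: int, channels: int) -> float:
    """Bit rate of uncompressed audio in thousands of bits per second."""
    return word_length_bits * sample_rate_hz * channels / 1000


APTX_HD_BIT_RATE_KBPS = 576  # figure quoted in the text

# Assumption: a stereo source at the maximum word length and sample rate.
source_rate = pcm_bit_rate_kbps(24, 48_000, 2)
print(source_rate)                          # 2304.0
print(source_rate / APTX_HD_BIT_RATE_KBPS)  # 4.0
```

Under these assumptions the codec has to fit the audio into a quarter of its original data rate, a fixed reduction that fully lossless coding cannot guarantee for every signal.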

Another adaptable feature of aptX-HD is the coding latency. Coding latency is the delay introduced while the audio is encoded and decoded. The coding latency of aptX-HD can be scaled as low as 1 ms for audio sampled at 48 kHz, depending on other settings of the codec.
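
A latency expressed in milliseconds can also be read as a number of samples, which gives a feel for how little audio the codec may hold back at any moment. The helper below is a simple unit conversion, not a description of the codec's internals, and its name is made up for this example.

```python
def latency_in_samples(latency_ms: float, sample_rate_hz: int) -> float:
    """Number of samples that pass during a given latency."""
    return latency_ms * sample_rate_hz / 1000


# The 1 ms figure quoted above, at a 48 kHz sample rate.
print(latency_in_samples(1, 48_000))  # 48.0
```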

aptX-HD performs very well compared with other lossless compression formats, as long as the coding latency is kept to 5 ms or less. This makes aptX-HD particularly useful for delay-sensitive interactive audio applications such as wireless headphones, where the audio is streamed from a user’s device (mobile, tablet, PC) to the output (wireless headphones). A low latency means that the user should experience no noticeable delay between pressing play and receiving audio at the headphones, regardless of whether the source is a small MP3 (lossy) file or a larger WAV (uncompressed) file.

aptX-HD and many other lossless codecs require less processing power than well-known lossy codecs such as MP3 and AAC. aptX-HD achieves this by using the simplest coding method possible whilst balancing other operating factors such as the coding delay and the level of compression. This is especially important for wireless headphones, as it means the battery life of the device is less affected: the battery should last longer than in wireless headphones with the same size battery that do not use aptX-HD. Depending on the settings applied, aptX-HD can encode a 48 kHz 16-bit stereo stream using only 10 MIPS (millions of instructions per second) on a modern processor. The decoder at the other end uses only 6 MIPS on the same platform, which further shows how modest the processing requirement is.

Additional information such as metadata and synchronisation data can be embedded into the aptX-HD stream at variable rates. Because the rate at which this data is embedded is variable, the decoder can resynchronise if data is corrupted or lost, which helps to maintain a high QoS (Quality of Service). Depending on the settings applied within the decoding process, resynchronisation can occur within 1-2 ms.
